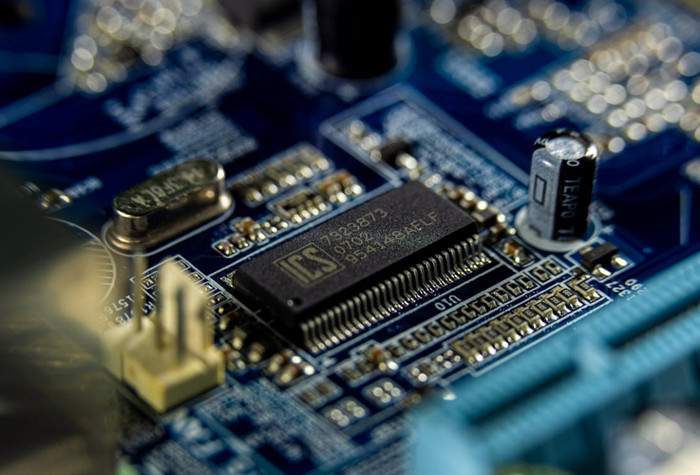

Soft2bet and the unseen hardware that makes instant play possible

When you press play and a game loads instantly, it feels like magic. But behind the curtain is a stack of very real silicon, cables, firmware, and dashboards that either keep the experience crisp or let it wobble. Covering consumer tech for years has trained me to obsess over FPS and thermals; working around large, always-on platforms like Soft2bet taught me to care even more about tail latency, observability, and how storage behaves under weird traffic spikes at 2 a.m.

I stumbled across the Uri Poliavich bio and it nudged me to think about leadership choices that ripple down to the rack. Vision is nice, but hardware discipline is what makes vision feel instantaneous to end users. Here is how modern iGaming stacks stay fast and sane, and what any builder—whether you run a lab server or architect production—can borrow.

Latency is a feature

You can throw cores at a problem and still lose users if your p95 and p99 latencies are sloppy. For real-money play, the platform must feel immediate, predictable, and fair: no stutters when odds update or when a live event settles. The trick is to treat latency like a product requirement, not a consequence. That means measuring it where it happens, cutting unnecessary data copies, and never doing blocking work on the request path. Async I/O, efficient serialization, and connection reuse beat raw clock speed more often than not.
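To make the p95 and p99 point concrete, here is a minimal sketch of a nearest-rank percentile over a batch of latency samples. The function names and the sample values are made up for illustration; a production system would more likely use streaming histograms than a full sort.

```python
import math


def percentile(samples_ms, pct):
    """Nearest-rank percentile: the smallest sample that has at least
    pct percent of all samples at or below it."""
    if not samples_ms:
        raise ValueError("no samples")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in (0, 100]")
    ordered = sorted(samples_ms)
    rank = math.ceil(pct * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


def latency_summary(samples_ms):
    """Mean next to the tail percentiles, so the gap between them is visible."""
    return {
        "mean": sum(samples_ms) / len(samples_ms),
        "p50": percentile(samples_ms, 50),
        "p95": percentile(samples_ms, 95),
        "p99": percentile(samples_ms, 99),
    }


# Illustrative data: 90 fast requests, 6 slow ones, 4 very slow ones.
samples = [20.0] * 90 + [150.0] * 6 + [900.0] * 4
summary = latency_summary(samples)
print(summary)
```

With this made-up data the mean is 63 ms and the median is 20 ms, which both look healthy, while p95 is 150 ms and p99 is 900 ms. The tail is what the unlucky user actually feels.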

Storage architecture that survives spikes

Bursty writes and small random reads will expose weak disks in minutes. NVMe is table stakes, but topology matters more than logos. Pair fast TLC drives with enough over-provisioning to keep write amplification in check. Use separate pools for hot state and cold history so your ledger updates never wait behind analytics. Replicate across failure domains—not just nodes—to avoid correlated hiccups. And rehearse restores; a backup you cannot restore quickly is a story, not a strategy.

Network paths that do not flinch

Packets win or lose the UX. Keep paths short, stable, and boring. Inside the data center, flatten the network and pin critical services to predictable routes. Across regions, pick transit that prioritizes consistency over theoretical bandwidth. For real-time odds or live dealer video, micro-buffers and aggressive congestion control are your friends. The user will forgive a slightly lower bitrate; they will not forgive jitter when money is on the line.

Dashboards that show intent

Dashboards should show intent, not decoration. Start with four signals that never lie: saturation of storage queues, tail latency of core APIs, error budgets by customer cohort, and cache hit ratios for the hottest keys. Everything else is a drill-down off those. Alert on symptoms users feel, not on resource counters that recover by themselves. And carve dashboards per market, because midnight in Toronto is not midnight in Malmö.
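One way to alert on a symptom rather than a counter is to track how much error budget each market has left. The sketch below assumes a 0.1% allowed error rate and a 25% "remaining budget" alert threshold; both numbers, the function names, and the request counts are hypothetical.

```python
def budget_remaining(total, failed, allowed_error_rate=0.001):
    """Fraction of the error budget left in this window.
    1.0 means untouched, 0.0 means exhausted, negative means overspent."""
    if total == 0:
        return 1.0
    allowed_failures = total * allowed_error_rate
    return 1.0 - failed / allowed_failures


def markets_to_alert(window_counts, min_remaining=0.25):
    """Names of markets whose remaining budget fell below the threshold."""
    return sorted(
        market
        for market, (total, failed) in window_counts.items()
        if budget_remaining(total, failed) < min_remaining
    )


# Illustrative (total requests, failed requests) per market for one window.
window_counts = {
    "toronto": (200_000, 50),   # 50 of 200 allowed failures used
    "malmo": (100_000, 150),    # 150 of 100 allowed failures used
}
alerts = markets_to_alert(window_counts)
print(alerts)
```

In this example only Malmö pages anyone: it has overspent its budget even though its raw failure count would look small on a global dashboard.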

Security at the hardware layer

Compliance teams talk policy; attackers test physics. Lock down firmware versions, enforce secure boot, and rotate hardware security module keys on a schedule tied to audits, not moods. Keep power and cooling redundant and monitored because side effects show up there first. If you ever need to prove fairness, being able to show signed attestations from trusted hardware is worth more than a page of cryptography talk.

Edge is not a buzzword

For platforms with global audiences, proximity beats perfection. Push static assets, device-specific bundles, and precomputed recommendations to the edge. Keep dynamic decisions close to the source of truth, but minimize roundtrips. A tiny function at the edge that short-circuits an obviously invalid request saves more than bandwidth; it saves the user from feeling delay you could have predicted.
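As a rough sketch of that "tiny function at the edge", the check below rejects requests that are obviously invalid before they cost a round trip to the origin. It is plain Python rather than any particular edge runtime's API, and the size limit and the specific rules are assumptions chosen for illustration.

```python
MAX_BODY_BYTES = 4096  # hypothetical limit for this endpoint


def edge_precheck(method, headers, body):
    """Return (status, reason) to reject at the edge,
    or None to forward the request to the origin."""
    if method != "POST":
        return (405, "method not allowed")
    # Header names are case-insensitive, so normalize before checking.
    if "authorization" not in {name.lower() for name in headers}:
        return (401, "missing credentials")
    if len(body) > MAX_BODY_BYTES:
        return (413, "payload too large")
    return None


print(edge_precheck("POST", {}, b"{}"))
print(edge_precheck("POST", {"Authorization": "Bearer example"}, b"{}"))
```

The edge only answers questions it can settle without the source of truth. Anything that depends on balances or odds still goes to the origin.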

A pragmatic checklist for editors and builders

- Map the request path for the three most common actions and remove one network hop from each.

- Split hot and cold data. Put money-touching writes on the fastest, quietest pool you have.

- Track p95 and p99 for user actions, not just service calls. Users experience workflows, not microservices.

- Burn in new NVMe drives with synthetic bursts that mimic weekend peaks before they enter the cluster.

- Use feature flags to decouple deployment from release; roll back in seconds, not meetings.

- Keep on-call runbooks short, specific, and hardware aware. If it needs a diagram, add the diagram.

- Budget for observability as if it were storage. You cannot fix what you cannot see.

- Test failover during live traffic windows you actually fear, not during quiet Tuesdays.
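The feature-flag item in the checklist can be sketched as a deterministic percentage rollout. This is one common approach, not a description of any specific flag service; the flag name, user IDs, and function names are hypothetical.

```python
import hashlib


def bucket(flag_name, user_id):
    """Map a (flag, user) pair to a stable bucket in the range 0..99."""
    digest = hashlib.sha256(f"{flag_name}:{user_id}".encode()).digest()
    return int.from_bytes(digest[:4], "big") % 100


def is_enabled(flag_name, user_id, rollout_percent):
    """True when the user's bucket falls inside the current rollout."""
    return bucket(flag_name, user_id) < rollout_percent


# The code ships dark at 0 percent, then the percentage is raised gradually.
for percent in (0, 10, 100):
    enabled = sum(is_enabled("new_lobby", f"user-{i}", percent) for i in range(1000))
    print(percent, enabled)
```

Because each bucket is stable, raising the percentage only adds users and never flips anyone back and forth. Rolling back means setting the percentage to 0, which needs no deploy.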

Why this matters beyond casinos

Even if you never ship a game, the lessons translate. Any application that feels “instant” is quietly excellent at persistence, queuing, and edges. The reason a session resumes flawlessly after a dropped connection is that someone engineered state so it can be rehydrated quickly, with graceful timeouts and idempotent writes. The reason promotions or seasonal events do not melt the stack is that capacity was planned for fan-out and cache churn, not average load.
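Idempotent writes are what make a retry after a dropped connection safe. The sketch below uses an in-memory ledger with client-supplied idempotency keys. The class and key format are hypothetical, and a real system would keep the key table in durable storage alongside the balance.

```python
class Ledger:
    """Toy ledger where replaying a write with the same key is a no-op."""

    def __init__(self):
        self.balance_cents = 0
        self._applied = {}  # idempotency key -> balance after that write

    def credit(self, idempotency_key, amount_cents):
        # A replayed request returns the original result without
        # touching the balance a second time.
        if idempotency_key in self._applied:
            return self._applied[idempotency_key]
        self.balance_cents += amount_cents
        self._applied[idempotency_key] = self.balance_cents
        return self.balance_cents


ledger = Ledger()
first = ledger.credit("deposit-0001", 500)
retry = ledger.credit("deposit-0001", 500)   # client retried after a timeout
second = ledger.credit("deposit-0002", 250)  # a genuinely new write
print(first, retry, second, ledger.balance_cents)
```

The retry returns the same balance as the first attempt, so the client can resend freely until it gets an answer.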

The human part of the hardware story

It is easy to romanticize algorithms and forget the people who wire racks and label cables. The best teams I have watched pair ambition with maintenance. They schedule patch windows and actually use them. They deprecate services before the ecosystem grows barnacles. They treat a half-second saved on signup like a press release that never needed words. When platforms in the Soft2bet class get this right, the result is simple to the player and satisfying to anyone who has ever tightened a loose SFP.

The glamour goes to the UI, but the trust lives in the path a packet travels and the millisecond an NVMe controller decides how to place your write. If you want your product to feel instant, start where the electricity meets the plan.