Google Makes Its Scalable Supercomputers for Machine Learning Publicly Available

Google has made its Cloud TPU v2 Pods and Cloud TPU v3 Pods publicly available to help machine learning (ML) researchers, engineers, and data scientists iterate faster and train more capable machine learning models.

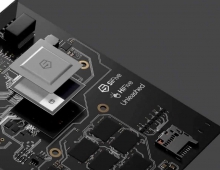

To accelerate the largest-scale machine learning applications, Google created custom silicon chips called Tensor Processing Units (TPUs). When assembled into multi-rack ML supercomputers called Cloud TPU Pods, these TPUs can complete ML workloads in minutes or hours that previously took days or weeks on other systems.

Today, for the first time, Google Cloud TPU v2 Pods and Cloud TPU v3 Pods are available in beta to ML researchers.

Google Cloud is providing a full spectrum of ML accelerators, including both Cloud GPUs and Cloud TPUs. Cloud TPUs offer competitive performance and cost, often training cutting-edge deep learning models faster while delivering significant savings.

While some custom silicon chips can only perform a single function, TPUs are fully programmable, which means that Cloud TPU Pods can accelerate a wide range of ML workloads, including many of the most popular deep learning models. For example, a Cloud TPU v3 Pod can train ResNet-50 (image classification) from scratch on the ImageNet dataset in just two minutes or BERT (NLP) in just 76 minutes.

A single Cloud TPU Pod can include more than 1,000 individual TPU chips, which are connected by an ultra-fast, two-dimensional toroidal mesh network. The TPU software stack uses this mesh network to let many racks of machines be programmed as a single, giant ML supercomputer through a variety of flexible, high-level APIs.
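To make the two-dimensional toroidal mesh more concrete, the following is a minimal Python sketch of how neighbors and hop counts work on a grid whose edges wrap around. The 32 x 32 grid size and the function names `torus_neighbors` and `torus_hops` are illustrative assumptions, not details of Google's actual interconnect or software stack.

```python
def torus_neighbors(x, y, width, height):
    """Return the four neighbors of chip (x, y) on a width x height 2D torus.

    The modulo makes the edges wrap around, so every chip has exactly
    four neighbors, including chips on the border of the grid.
    """
    return [
        ((x - 1) % width, y),   # left (wraps to the last column)
        ((x + 1) % width, y),   # right (wraps to the first column)
        (x, (y - 1) % height),  # up (wraps to the last row)
        (x, (y + 1) % height),  # down (wraps to the first row)
    ]


def torus_hops(a, b, width, height):
    """Minimum number of link hops between chips a and b on the torus."""
    dx = abs(a[0] - b[0])
    dy = abs(a[1] - b[1])
    # On each axis, travel directly or go the other way around the ring.
    return min(dx, width - dx) + min(dy, height - dy)


# Hypothetical 32 x 32 grid (1,024 chips), chosen only for illustration.
WIDTH, HEIGHT = 32, 32
print(torus_neighbors(0, 0, WIDTH, HEIGHT))
print(torus_hops((0, 0), (31, 31), WIDTH, HEIGHT))
```

In this sketch, opposite corners of the grid are only two hops apart because of the wraparound links, whereas a mesh without wraparound would need 62 hops for the same pair. Shorter worst-case paths of this kind are one common reason to choose a torus topology.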

The latest-generation Cloud TPU v3 Pods are liquid-cooled, and each one delivers more than 100 petaFLOPs of computing power. In terms of raw mathematical operations per second, a Cloud TPU v3 Pod is comparable to a top-five supercomputer worldwide (though it operates at lower numerical precision).

It’s also possible to use smaller sections of Cloud TPU Pods called “slices.” ML teams often develop their initial models on individual Cloud TPU devices (which are generally available) and then expand to progressively larger Cloud TPU Pod slices via both data parallelism and model parallelism to achieve greater training speed and model scale.
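As a rough illustration of data parallelism, the sketch below simulates it in plain Python: a batch is split into equal shards, each simulated worker computes a gradient on its shard with the same weights, and the gradients are averaged before the update. The one-parameter model `y = w * x`, the function names, and the learning rate are all assumptions made for the example; real Cloud TPU training would go through a framework rather than code like this.

```python
def gradient(w, shard):
    """Gradient of mean squared error for the model y = w * x on one shard."""
    return sum(2 * (w * x - y) * x for x, y in shard) / len(shard)


def data_parallel_step(w, batch, num_workers, lr=0.01):
    """One gradient-descent step with the batch split across simulated workers."""
    if len(batch) % num_workers != 0:
        raise ValueError("batch size must be divisible by num_workers")
    shard_size = len(batch) // num_workers
    # Split the batch into equal shards, one per simulated worker.
    shards = [
        batch[i * shard_size:(i + 1) * shard_size] for i in range(num_workers)
    ]
    # Every worker uses the same weights but sees only its own shard.
    local_grads = [gradient(w, shard) for shard in shards]
    # Averaging the local gradients stands in for an all-reduce on real hardware.
    avg_grad = sum(local_grads) / num_workers
    return w - lr * avg_grad


# Toy data generated from y = 3x; the "true" weight is 3.0.
batch = [(float(x), 3.0 * x) for x in range(1, 9)]
one_worker = data_parallel_step(0.0, batch, num_workers=1)
four_workers = data_parallel_step(0.0, batch, num_workers=4)
print(one_worker, four_workers)
```

With equal-sized shards, the averaged gradient matches the full-batch gradient, so one worker and four workers produce the same update here. The benefit of adding workers is that each one processes a smaller share of the batch. Model parallelism, which splits the model itself across devices, is not covered by this sketch.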